BASIC PRINCIPLES

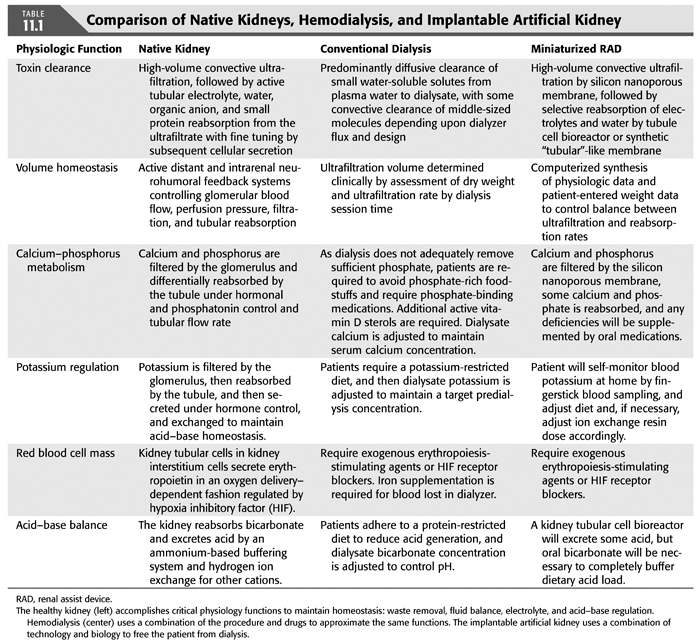

Dialysis practices vary around the world, but in the United States, it is generally performed three times weekly in an outpatient unit using an extracorporeal circuit that includes a semipermeable membrane. Given that the specific toxins responsible for uremia remain imperfectly defined, there has never been a full understanding of the best metric for effective dialysis. Reliance on measures of small and middle molecular clearance has given us the ability to develop dialysis membranes that have become increasingly effective and provides a starting point for the evaluation of newer artificial dialysis systems. A comparison of the various physiologic functions as performed by native kidneys, conventional dialysis, and newer implantable options is outlined in TABLE 11.1.

Small Molecule Clearance

Small molecules have long been the target of dialysis assessment, with a particular focus on urea, which serves as a small molecular weight marker for the variety of other uremic toxins that accumulate in ESKD. While often treated collectively, these molecules behave differently according to their molecular characteristics, their interactions with the dialysis membrane and, most importantly, their kinetic behaviors between and within various body compartments. The differences between urea and phosphorus clearance illustrate this variance.

Urea

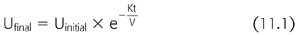

Having a molecular weight of approximately 60 Da and a large volume of distribution, urea is an endogenously produced molecule that is generated as a product of protein degradation. Protein metabolism leads to the endogenous production of urea at a rate of approximately 10 to 13 mg/min. Dialysis is able to remove small solutes, like urea, in direct proportion to their concentration in the blood. Typically, a single compartment model for urea removal is used to estimate the clearance of urea (EQUATION 11.1):

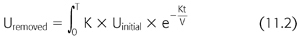

In this model, Uinitial and Ufinal represent the urea concentration at the beginning and the end of the hemodialysis session, respectively. Here, K is the instantaneous clearance, a parameter that is governed by the membrane characteristics and the dialysate flow rate but is predominantly limited by the blood flow rate. Time is represented by t, and V represents the volume of distribution. The targeted urea removal for a given dialysis session is typically a single-pool Kt/V of 1.2 to 1.4. A Kt/V of 1.0 equates to the clearance of 1.0 volume of distribution. The targeted Kt/V of 1.2 to 1.4 allows for slightly greater clearance and accounts for urea rebound from the unmodeled peripheral compartment. It is possible to further quantify urea removal by solving for the following (EQUATION 11.2):

Given that the urea generation between dialysis sessions is approximately 28 to 43 g, we can then determine what the initial urea concentration must be in these patients in order for urea removal to match urea production. As noted above, K is primarily limited by dialysis blood flow, so if we assume that K = 350 to 400 mL/min, we can see that predialysis blood urea nitrogen (BUN) must rise to 70 mg/dL or higher in order to remove the urea at its production rate of 10 mg/min or greater. Indeed, in practice, it is understood that patients who do not reach this threshold in their azotemia, barring significant residual renal function, are typically undernourished and do not have adequate protein intake. This has been found, through observational assessment of other clinical metrics, to be strongly associated with mortality in these patients.
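As a numerical illustration of the single-pool model described in this section, the sketch below assumes that EQUATION 11.1 takes the standard first-order form, Ufinal = Uinitial × e^(−Kt/V), and ignores urea generation and ultrafiltration during the session. The function names and the example values of K, t, and V are hypothetical and chosen only for illustration.

```python
import math


def kt_over_v(k_ml_min: float, t_min: float, v_litres: float) -> float:
    """Dimensionless dose: volume cleared (K * t) divided by distribution volume V."""
    return (k_ml_min * t_min) / (v_litres * 1000.0)


def fraction_remaining(dose: float) -> float:
    """Ufinal / Uinitial for a single well-mixed pool (assumed form of EQUATION 11.1)."""
    return math.exp(-dose)


def fraction_removed(dose: float) -> float:
    """Share of the starting urea concentration removed by the end of the session."""
    return 1.0 - fraction_remaining(dose)


if __name__ == "__main__":
    # Hypothetical prescription: K = 250 mL/min for 240 min in a patient with V = 40 L.
    print(f"example Kt/V = {kt_over_v(250, 240, 40):.2f}")
    for dose in (1.0, 1.2, 1.4):
        print(f"Kt/V {dose:.1f}: {fraction_removed(dose):.1%} of urea concentration removed")
```

Under these assumptions, a Kt/V of 1.0 clears one volume of distribution but lowers the urea concentration by only about 63%, because each successive pass of blood carries less urea; the 1.2 to 1.4 target corresponds to a reduction of roughly 70% to 75%.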

Phosphorus

Phosphorus is another small molecule, like urea, that is able to pass through the dialysis membrane but does so slightly less ably than the slightly larger creatinine. The difference in behavior is largely attributable to the negative charge carried by phosphorus in combination with the water of hydration. However, unlike urea, which mobilizes relatively quickly from the peripheral compartment for dialytic clearance and approaches a one-compartment kinetic model, phosphorus is better described with two-compartment modeling and probably a multicompartment model. Phosphorus concentrations very quickly fall to a low level in the plasma compartment during dialysis but mobilize slowly from peripheral tissues and achieve a postdialysis concentration that approaches the initial starting concentration.

To explore this more fully, the average dialysis patient, who is pursuing the desired 1 to 1.2 g/kg/day of protein, consumes approximately 780 to 1,450 mg of phosphorus daily, of which approximately 60% to 80% is absorbed in the gastrointestinal tract. Four-hour dialysis treatments are only able to remove approximately 1,000 mg of phosphorus per treatment, which is sufficient to maintain normal phosphorus levels in only a minority of patients. As a consequence, most dialysis patients are put on oral phosphorus binders which can reduce gastrointestinal absorption to approximately 40% or 400 to 650 mg daily.
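The mismatch between phosphorus absorption and dialytic removal can be made concrete with a short weekly balance. The sketch below uses only the figures quoted above (daily intake, absorbed fraction, removal per treatment, and the quoted absorbed range on binders) together with the thrice-weekly schedule; the function and constant names are hypothetical, and the result is a rough bookkeeping exercise rather than a kinetic model.

```python
INTAKE_MG_PER_DAY = (780.0, 1450.0)        # dietary phosphorus, low and high
ABSORBED_FRACTION = (0.60, 0.80)           # gastrointestinal absorption without binders
ABSORBED_WITH_BINDERS_MG_PER_DAY = (400.0, 650.0)  # range quoted in the text
REMOVED_PER_SESSION_MG = 1000.0            # approximate removal per 4-hour treatment
SESSIONS_PER_WEEK = 3


def weekly_absorbed_without_binders() -> tuple[float, float]:
    """Low and high weekly absorbed phosphorus (mg) without binders."""
    low = INTAKE_MG_PER_DAY[0] * ABSORBED_FRACTION[0] * 7
    high = INTAKE_MG_PER_DAY[1] * ABSORBED_FRACTION[1] * 7
    return low, high


def weekly_absorbed_with_binders() -> tuple[float, float]:
    """Low and high weekly absorbed phosphorus (mg) on binders."""
    low, high = ABSORBED_WITH_BINDERS_MG_PER_DAY
    return low * 7, high * 7


def weekly_removed() -> float:
    """Weekly dialytic phosphorus removal (mg) on a thrice-weekly schedule."""
    return REMOVED_PER_SESSION_MG * SESSIONS_PER_WEEK


if __name__ == "__main__":
    removed = weekly_removed()
    for label, (low, high) in (
        ("without binders", weekly_absorbed_without_binders()),
        ("with binders", weekly_absorbed_with_binders()),
    ):
        print(f"{label}: absorbed {low:.0f} to {high:.0f} mg/wk, "
              f"net {low - removed:+.0f} to {high - removed:+.0f} mg/wk")
```

Without binders, even the low end of absorption exceeds the roughly 3,000 mg removed per week; with binders, only patients near the low end of the quoted range come close to balance.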

In contrast to intermittent dialysis, continuous renal replacement therapies (CRRTs) provide significant phosphorus clearance. In these patients, the continuous nature of the therapy gives phosphorus time to mobilize slowly from peripheral tissues and be removed. If critically ill patients are left on continuous therapy for any length of time, their limited oral intake often leads to profound hypophosphatemia and requires supplemental phosphorus.

Middle Molecule Clearance

Middle molecular weight molecules include those that range from approximately 2 kDa (~20 times the size of creatinine) to 45 kDa. The most commonly studied middle molecule is β2-microglobulin. It is a component of the major histocompatibility complex and in normal physiology is shed from the cell surface during regular cell turnover and then is eliminated, almost exclusively, by the kidney (4). Despite being readily able to pass through the kidney filtration barrier, it is relatively poorly cleared by traditional hemodialysis filters. Even so-called “high-flux” dialyzers have β2-microglobulin clearances that are only one-tenth to one-fifth that of urea (5). If serum concentrations are sufficiently high, β2-microglobulin can go on to polymerize and coalesce in amyloid plaques that have been found in nearly every major organ system. Common manifestations include nerve entrapment syndromes like carpal tunnel syndrome and joint disease, but it has been implicated in cardiovascular disease and neurologic plaques as well in ESKD patients (6–8).

The clearance of these middle molecules is largely a consequence of the dialysis membrane “cut-off” or pore size maximum, although it is important to recognize that even in an idealized membrane, pore size is not completely uniform, and solute transport does not abruptly stop at some given or defined molecular size. That is to say that there are pores that vary around a certain mean. In order to effectively facilitate transport of these middle molecules, while avoiding transport of so-called large molecules, like albumin with a molecular weight of 66.5 kDa, the manufacturer must engineer a pore size sufficient to span the size of interest but to tail off before larger serum proteins are given passage.

Salt and Water Management

The native kidneys are wonderfully adept at managing extracellular fluid volume and osmolality within very finely regulated margins. That regulation is the product of interwoven and overlapping neurohormonal feedback loops that allow for accommodation of widely varying intake. In patients who are dependent on dialysis, those hormonal signals that would normally influence kidney sodium handling go unanswered, and salt and water removal are driven mechanically through modulation of the dialysate concentrations and ultrafiltration (UF) rates set at the bedside.

The average fluid intake in adults is approximately 1.5 L/day; an additional 350 to 400 mL/day of water is generated through the metabolism of carbohydrates. This “intake” is balanced in adults by fecal losses averaging 100 mL/day, insensible losses of about 400 to 600 mL/day, and urinary output. Using the above values, which vary widely between individuals, that leaves approximately 1.3 L/day of water that must be excreted by the kidneys in order to stay in steady state. From a dialysis perspective, this means that approximately 9 L of water per week must be removed in dialysis-dependent patients who lack residual renal function. Thrice-weekly dialysis, then, requires that approximately 3 L be removed per treatment, which, depending on the size of the patient, can be both physiologically taxing and technically challenging. The physiologic stresses, in particular, have been suggested as the primary driver behind increased cardiovascular mortality, with high UF rates correlating with negative outcomes (9–11).
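The water balance above is simple arithmetic and can be reproduced in a few lines. The sketch below takes the intake and loss figures from the text; the 4-hour session length and the 70-kg body weight used for the weight-normalized UF rate are assumptions added for illustration, as are the function names.

```python
def daily_renal_water_l(intake_l: float, metabolic_l: float,
                        fecal_l: float, insensible_l: float) -> float:
    """Water (L/day) left for the kidneys, or dialysis, to remove at steady state."""
    return intake_l + metabolic_l - fecal_l - insensible_l


def per_session_removal_l(daily_l: float, sessions_per_week: int = 3) -> float:
    """Volume (L) each session must remove when no residual renal function remains."""
    return daily_l * 7 / sessions_per_week


def uf_rate_ml_per_kg_hr(volume_l: float, hours: float, weight_kg: float) -> float:
    """Weight-normalized ultrafiltration rate for one session."""
    return volume_l * 1000.0 / hours / weight_kg


if __name__ == "__main__":
    low = daily_renal_water_l(1.5, 0.35, 0.1, 0.6)   # least metabolic water, most insensible loss
    high = daily_renal_water_l(1.5, 0.40, 0.1, 0.4)  # most metabolic water, least insensible loss
    print(f"renal water load: {low:.2f} to {high:.2f} L/day")
    session = per_session_removal_l(1.3)
    print(f"per-session removal at 1.3 L/day: {session:.2f} L")
    # Assumed 4-hour treatment in a hypothetical 70-kg patient.
    print(f"UF rate: {uf_rate_ml_per_kg_hr(3.0, 4, 70):.1f} mL/kg/hr")
```

The quoted 1.3 L/day sits within the 1.15 to 1.4 L/day range these figures produce. The same 3 L removed over 4 hours would be a considerably higher weight-normalized rate in a smaller patient, which is why patient size matters to the tolerability of the treatment.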

Sodium management is also intimately tied to the dialysis gradients established across the semipermeable membrane. Sodium balance is generally achieved by dialyzing patients against a dialysis bath containing 140 mmol/L of sodium, matching the serum concentration. Higher sodium baths tend to lead to higher interdialytic weight gain and higher blood pressures. Lower sodium dialysate baths on thrice-weekly hemodialysis tend to lead to intradialytic hypotension (12). There is some preliminary data on using lower sodium dialysate solutions with nocturnal or frequent dialysis to maintain slight negative sodium balance, but the long-term effects of this technique remain unknown (13). Obviously, in developing an artificial kidney, implantable, wearable, or otherwise “portable,” all of these clearances need to be collectively considered.

Wearable Dialysis Options

The first dialysis to treat chronic kidney disease (CKD) was performed in Seattle in March of 1960 (14). Since that time, a quest has been underway to balance the medical and mechanical demands of dialysis with the patients’ desires to live full and productive lives. It is important to realize that in the 1970s, just as the U.S. Congress was preparing to grant nearly universal health coverage to Americans with ESKD, most patients who were dependent on chronic dialysis were performing the procedure in their own homes (15) and were largely homebound. At that time, there was an interest in developing portable kidney replacement options to help liberate these patients from their homes; literature published during that era investigates the theoretical possibility of portable dialysis (16–19). However, as Medicare reimbursement allowed for a rapid growth in the CKD patient population, an extensive network of dialysis clinics was developed, which eliminated the homebound nature of the therapy by not only allowing for movement to and from treatments but also allowing for travel accommodations as needed.

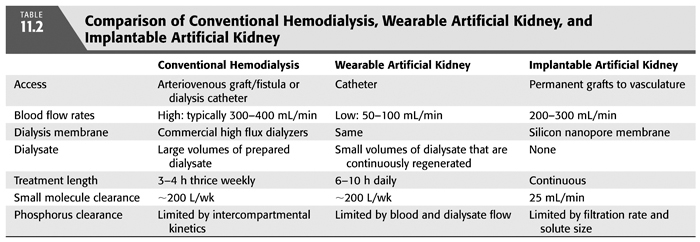

Recently, however, interest in portable therapies has resumed as observational data accumulates indicating that longer dialysis sessions may be beneficial with respect to patient outcomes (20). While incompletely understood, these longer sessions likely have less negative physiologic impact through slow UF. The improved solute control—as evidenced by the superior phosphorus management seen in these patients—may also lend itself to improved outcomes. Collectively, these observations have led to renewed interest in possible slow continuous dialysis using wearable or implantable systems, which are compared with conventional hemodialysis in TABLE 11.2, although these systems have their attendant challenges.

First among these challenges is the necessity of vascular access. Hemodialysis depends on reliable vascular access facilitating an unimpeded supply from and return to patients’ circulation (21). Significant data has shown the superiority of arteriovenous fistulae or grafts to catheter access. Fistula or graft access requires needle access and secure connections. Indeed, the risk posed by accidental venous needle disconnect is great, given the high throughput of blood. In standard dialysis treatments, with a blood flow rate of 400 mL/min, exsanguination could occur in less than 15 minutes. In a dialysis unit, safety is ensured by stabilizing the involved limb and maintaining direct visualization through the duration of treatment. With a wearable system, the fundamental driving impetus for which is mobility to better suit patient lifestyles, this vascular access might not necessarily be so stable. Indeed, a dislodged line could quickly become disastrous. While catheters may provide additional stability, they have been linked to increased infectious risk and may be related to ongoing inflammatory activation (22,23), with a possible association with cardiovascular and other disease states (11).
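The exsanguination estimate quoted for a venous needle disconnect follows from dividing blood volume by pump flow. The sketch below assumes a total adult blood volume of about 5 L, a figure not stated in the text, and gives only an upper bound on the time available, since hemodynamic collapse would occur well before the entire volume is lost. The 50 mL/min comparison is illustrative of the much lower flows used with slow continuous therapy.

```python
def minutes_to_lose_volume(volume_ml: float, blood_flow_ml_min: float) -> float:
    """Time for an open return line to pump out a given blood volume."""
    if blood_flow_ml_min <= 0:
        raise ValueError("blood flow must be positive")
    return volume_ml / blood_flow_ml_min


if __name__ == "__main__":
    ASSUMED_BLOOD_VOLUME_ML = 5000.0  # assumption: typical adult total blood volume
    for flow in (400.0, 50.0):
        minutes = minutes_to_lose_volume(ASSUMED_BLOOD_VOLUME_ML, flow)
        print(f"{flow:.0f} mL/min: {minutes:.1f} min")
```

At 400 mL/min the assumed volume is pumped out in 12.5 minutes, consistent with the figure of less than 15 minutes; a lower blood flow lengthens that window but does not remove the hazard.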

The volume of required dialysate is another logistical challenge. Maintaining the electrochemical gradients required to provide dialysis in the limited time allotted for traditional thrice-weekly dialysis mandates that the dialysate flow rates exceed the blood flow, with maximal dialysis achieved with countercurrent flows. In conventional thrice-weekly dialysis, the dialysate flow rates, typically in excess of 600 mL/min, lead to the use of approximately 200 L of dialysate per treatment. Other therapies that have allowed for less intensive but more frequent dialysis have limited the amount of dialysate needed at home, with six times weekly dialysis requiring lower dialysate flows and thus only 30 to 50 L of dialysate per treatment. One novel strategy that has been used to regenerate fresh dialysate from waste dialysate is the application of solute sorbent materials to the circuit.
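The dialysate volumes quoted above follow directly from flow rate and treatment time. The sketch below assumes a 4-hour single-pass treatment and uses illustrative flow rates; the function name and the specific flows are not taken from the text.

```python
def dialysate_volume_l(flow_ml_min: float, treatment_hours: float) -> float:
    """Single-pass dialysate consumed in one treatment, in litres."""
    return flow_ml_min * treatment_hours * 60.0 / 1000.0


if __name__ == "__main__":
    # Assumed 4-hour conventional session at two illustrative dialysate flows.
    for flow in (600.0, 800.0):
        print(f"{flow:.0f} mL/min for 4 h: {dialysate_volume_l(flow, 4):.0f} L")
```

At 600 mL/min a 4-hour session uses 144 L, and at 800 mL/min it uses 192 L, which is the order of magnitude that makes single-pass dialysate impractical for a wearable device and motivates regeneration.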

Solute removal devices that utilize sorbent materials have been in commercial production for decades. Initially developed by National Aeronautics and Space Administration (NASA) for water purification on manned spaceflight missions, these solute removal devices have since been adapted for use in dialysis systems (24). The REDY system, standing for REcirculation of DialYsate, was introduced commercially in the 1970s and remains available for use. The sorbent materials are able to remove various elements of spent dialysate in several steps to regenerate usable dialysate solutions. It is worth reviewing the details of this process briefly.

Spent dialysate, of course, contains metabolic toxins that need to be removed if that dialysate is meant to be reused. These include urea, potassium, phosphorus, and a variety of organic acids. By moving that dialysate through several steps, one can remove not only those known toxins but presumably many other metabolites that are removed by dialysis. In these systems, the dialysate is first exposed to urease, which cleaves the urea to carbon dioxide and ammonia. The newly formed ammonia scavenges free protons to form NH4+; given that the pKa of the ammonia–ammonium system (NH3/NH4+) is around 9, the protonated form is favored in dialysate with a neutral pH. This is the first step in excreting dietary acid. The dialysate then moves through a cation exchange resin and then an anion exchange resin to remove potassium and ammonium, and then phosphorus and sulfate, respectively. Other cationic and anionic solutes removed by dialysis are also eliminated in these columns, including calcium and magnesium. Lastly, the dialysate moves through an activated charcoal filter to remove organic solutes. Because calcium and magnesium are removed by the cationic resin and bicarbonate by the anionic resin, these must typically be replaced to some extent before the dialysate is returned for use (24,25).
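The statement that ammonia is largely protonated at neutral pH can be checked with the Henderson–Hasselbalch relationship. The sketch below uses the pKa of about 9 given in the text; the function name and the specific pH values are illustrative assumptions.

```python
def protonated_fraction(ph: float, pka: float = 9.0) -> float:
    """Fraction of total ammonia present as NH4+ at a given pH.

    From Henderson-Hasselbalch: [NH3]/[NH4+] = 10 ** (pH - pKa).
    """
    ratio_base_to_acid = 10.0 ** (ph - pka)
    return 1.0 / (1.0 + ratio_base_to_acid)


if __name__ == "__main__":
    for ph in (7.0, 7.4, 9.0):
        print(f"pH {ph:.1f}: {protonated_fraction(ph):.1%} as NH4+")
```

With a pKa of 9, roughly 99% of the ammonia is present as ammonium at pH 7.0, which is why the downstream cation exchange resin, rather than gas venting, carries most of the nitrogen load.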

These sorbent systems have become an important element among the wearable dialysis options currently being explored for a wider market.

Peritoneal Dialysis

Peritoneal dialysis (PD) can be described as the first “wearable” dialysis option. It only requires intermittent exchanges of peritoneal dialysate and allows patients to fit their treatment to their lives, in contrast to the more rigid schedules demanded by in-center hemodialysis. PD also has drawbacks, including a significant commitment on the part of the patient, with potentially many hours per week devoted to treatments, as well as the need for a large storage area and reserve for PD fluid. As early as 1976, Raja (25) reported the use of the REDY system to recycle spent PD fluid for reuse.

Presently, a commercial option for PD patients is being developed by the Singapore-based Automated Wearable Artificial Kidney (AWAK) Technologies. Their device uses urease, zirconium oxide, and zirconium phosphate along with activated charcoal to facilitate peritoneal dialysate regeneration as described above. Unlike standard hemodialysis, PD requires an osmotic gradient to facilitate water removal in these patients. The AWAK system, then, not only replaces the adsorbed salts after purification but also replaces glucose in the solution to maintain that gradient.

Another distinct difference between the standard PD routine and the AWAK system is the use of tidal dialysis. Whereas patients typically infuse large volumes with long dwells, tidal dialysis allows for small volumes with short dwells and almost continuous exchange. According to the published literature on these systems, approximately 4 L/hr is exchanged in patients using them (26), with achieved clearances that are superior to standard PD. Whether this modality improves observed patient outcomes such as mortality remains to be tested.

Ronco describes a similar device, the Vicenza Wearable Artificial Kidney for Peritoneal Dialysis (ViWAK) that also utilizes a specialized PD catheter with an inflow and outflow lumen—the Ronco catheter—and a small wearable cartridge containing the sorbent materials. The initial morning dwell is done manually by the patient, and after approximately 2 hours, when approximately 50% dialysate/plasma equilibrium has been achieved, recirculation is activated for the ensuing 10 hours or so, at a rate of 20 mL/min. Again, this allows for high daily clearances, and a weekly clearance >100 L/wk (27,28).

Hemodialysis

Just as there have been advances using these sorbent-regenerated dialysis models in PD, so too have advances been made in so-called continuous ambulatory hemodialysis, with two major current extracorporeal hemodialysis options being explored. The Wearable Artificial Kidney, or WAK, was trialed in Vicenza, Italy, in the mid-2000s and recently (2015) completed an initial human trial in the United States. As noted previously, while we are accustomed to high blood flow rates when patients are receiving extracorporeal kidney support, these are a mandate not of the modality but of the short treatment time offered to our outpatients. Indeed, the device currently being explored was first described by Gura in 2005 using off-the-shelf dialyzer membranes. The dialysate is circulated through a miniaturized system using the REDY components that reconstitutes the dialysate, and then a series of syringes adds the additional salts to the solution; heparin is added to the blood circuit. The entire device weighs approximately 5 kg.

The earliest clinical studies of this device were on patients requiring more UF than could be provided by routine dialysis (29). This was followed by experimental use in a small cohort of ESKD patients. Blood flow rates of 50 mL/min and total urea clearances of approximately 22 mL/min were achieved. Unfortunately, access did prove to be a hurdle, with two of the eight patients experiencing catheter thrombosis and at least one episode of needle dislodgment during treatment, although safety measures prevented significant blood loss and treatment continued after needle replacement (30). As with traditional CRRT options, phosphate and β2-microglobulin clearances were much higher than they are on thrice-weekly dialysis, again supporting the idea that mobilization from peripheral compartments is the fundamental problem in achieving higher clearances on dialysis (31).
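To put the reported WAK figures in context, the sketch below computes the fraction of urea extracted per pass and the volume cleared over time at a urea clearance of 22 mL/min. The 24-hour wear time and the 40-L volume of distribution are assumptions chosen for illustration, not values reported in the studies cited, and the function names are hypothetical.

```python
def extraction_ratio(clearance_ml_min: float, blood_flow_ml_min: float) -> float:
    """Fraction of solute removed from blood in a single pass through the dialyzer."""
    return clearance_ml_min / blood_flow_ml_min


def cleared_volume_l(clearance_ml_min: float, hours: float) -> float:
    """Volume of blood fully cleared of solute over the given wear time."""
    return clearance_ml_min * hours * 60.0 / 1000.0


def hours_to_clear_one_volume(v_litres: float, clearance_ml_min: float) -> float:
    """Wear time needed to reach Kt/V = 1.0 for a distribution volume V."""
    return v_litres * 1000.0 / clearance_ml_min / 60.0


if __name__ == "__main__":
    print(f"extraction ratio: {extraction_ratio(22, 50):.2f}")
    # Assumed continuous 24-hour wear.
    print(f"cleared volume per day: {cleared_volume_l(22, 24):.1f} L")
    # Assumed 40-L volume of distribution.
    print(f"hours to Kt/V of 1.0: {hours_to_clear_one_volume(40, 22):.1f}")
```

Under these assumptions the device would need a little over a day of continuous wear to deliver a Kt/V of 1.0, which illustrates why low-flow wearable systems depend on long treatment times rather than high instantaneous clearance.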

The Netherlands-based Nanodialysis Company has developed a slightly different approach that deviates from the REDY sorbent design described above. Instead of using disposable cartridges, a regenerable sodium polystyrene-based cartridge is used for cation exchange. With this device, the sodium polystyrene resin allows for displacement of sodium ions for cations in the dialysate that need to be expunged (potassium, ammonium, etc.). After a certain “lifetime,” the polystyrene is bathed again in a high concentration sodium bath, which displaces those cations that accumulated during dialysate exposure (32). In addition to reusable cation exchangers, they have also opted to use an electrochemical reaction to eliminate urea from the dialysate. With electrodes placed into the system, an electrical current oxidizes the spent urea into nitrogen, hydrogen, and carbon dioxide. This allows for less risk of urease failure or worse yet, urease breakthrough, but trades out that problem for the issues associated with gas production and heavy metal use with risk of metal ionization into the dialysate (33).

Implantable Renal Replacement Systems

Indeed, the pinnacle of this field’s aspiration is to achieve a bioengineered implantable device that would overcome the concerns associated with both infection and access failure while providing adequate dialytic clearance to manage the metabolic consequences of ESKD. While wearable therapies emphasize the use of regenerable dialysate solutions and have minimized the concerns associated with dialyzer exchange simply because of accessibility, these parts would pose inherent issues for implantable devices, as intermittent replacement would be necessary. While the membrane is likely a requisite piece of an implantable strategy and its lifespan will determine the frequency of exchange, successful strategies will likely forgo the use of dialysate.

Recellularization

Great interest has arisen around the prospect of rejuvenating a failed kidney by either reawakening its own cellular apparatus, or by replacing or repurposing other cell types to provide kidney function on an implanted engineered device. Both of these prospective treatment techniques share common features that lend themselves to review here.

While many different researchers have developed basic science techniques to harvest, grow, and influence differentiation of kidney tissues, those that have demonstrated the most promise include somatic cell nuclear transfer, and recellularizing organ matrices for implantation.

Somatic cell nuclear transfer involves generation of syngeneic donor tissue for a variety of uses. The tissues are generated by harvesting the nucleus of a somatic cell and then implanting it into an enucleated oocyte. The resulting embryonic or fetal tissue is then harvested for use (34). In the case of the kidney, those cells could theoretically be used to replace lost kidney tissues. More recent work has focused on using dedifferentiation signaling to return terminally differentiated cells of the host to pluripotency and then deliver these to the organ of interest (35). While promising, with tissues showing some histology consistent with kidney tissues, neither of these techniques has yet shown full reconstitution of the nephron.

Alternatively, some researchers have devised ways to use the matrix of an existing decellularized kidney as the structure of the kidney and then add cellular elements in the hopes of recreating complete kidney architecture. This has the benefit of providing the superstructure necessary for nephron and vascular scaffolding. The earliest work in this area was on cardiac tissue, with recapitulation of myocyte architecture and appropriate contractile orientations (36). However, the kidney architecture is significantly more complex and the process of recellularizing it more intensive. In 2013, Song et al. (37) published work wherein a decellularized rat kidney was seeded with human umbilical vein endothelial cells from the arterial circulation and with neonatal rat kidney cells from the lower genitourinary tract. Histologically, structures similar to glomeruli and tubules were seen, though on in vitro testing the implants were inferior to matched cadaveric kidneys, with less stringent filtration specificity (37).

Implantable Devices

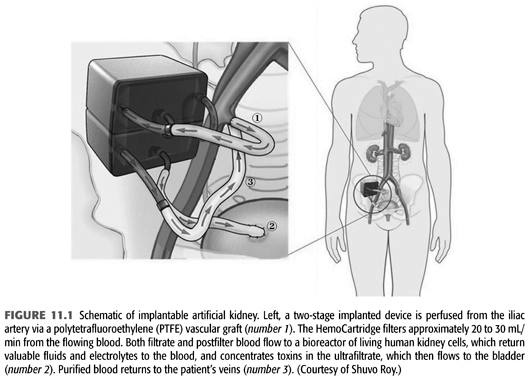

An alternate approach has been to develop a bioengineered device that could be implanted to permanently manage ESKD. Current concepts, being pioneered by Fissell and Roy, would involve two functional elements, similar to those in a native kidney—the first component would be akin to the glomerulus, generating a large volume filtrate. The filtrate would proceed on to the bioreactor which would function much like the tubular portion of the nephron does, modifying that filtrate through secretion and reabsorption to optimize the waste product outflow. A schematic of one possible design is seen in FIGURE 11.1.
