Graft and Patient Survival

Karl L. Womer

Herwig-Ulf Meier-Kriesche

Bruce Kaplan

University of Florida College of Medicine, Gainesville, Florida 32610-0224

INTRODUCTION

Patient Survival

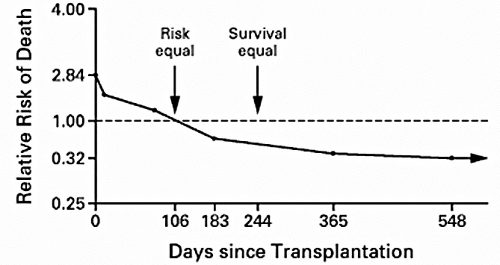

There is general consensus that the optimal treatment for most patients with end-stage renal disease (ESRD) is renal transplantation. Benefits have been classically described in terms of improved quality of life (1, 2, 3, 4) and reduced medical expense (2,5). According to the 2001 United Network for Organ Sharing (UNOS) report (6), current 1- and 5-year patient survival rates for recipients of cadaveric kidney transplants are 95% and 81%, respectively, and 98% and 91%, respectively, for living donor transplant patients. Improvements in patient survival after cadaveric transplantation have been demonstrated in several studies (7, 8, 9, 10). However, randomized trials comparing survival during dialysis treatment and after transplantation are neither feasible nor ethical. Moreover, transplant recipients are derived from a highly selected subgroup of patients on dialysis who are on average younger and healthier and of higher socioeconomic status than those dialysis patients not selected for transplantation. Wolfe et al (11) avoided this selection bias by merging data from the U.S. Renal Data System (USRDS) with information about the renal transplantation waiting list from the Scientific Renal Transplant Registry (SRTR) operated by UNOS and found large long-term benefits for cadaveric transplantation versus maintenance dialysis, despite the increased short-term risk of death after transplantation (Fig. 1.1). The relative survival benefits of transplantation were similar for men and women, and although more profound in diabetics, were extended to subgroups of patients with other causes of ESRD. When the results were analyzed according to race, transplantation reduced the long-term relative risk (RR) of death more among Asians and whites than among Native Americans and blacks. However, in all four racial groups, transplantation significantly reduced the long-term risk of death. 
Likewise, although the greatest difference in long-term survival was found among patients who were 20 to 39 years old at the time of placement on the waiting list, improvements were still noted in patients who were 70 to 74 years old. The findings by Wolfe et al provided a convincing answer to the most basic question about therapy for patients with ESRD and refocused attention on kidney transplantation as a life-prolonging rather than merely a life-enhancing procedure.

Graft Survival

The era of clinical organ transplantation began in 1962 with the discovery that azathioprine was clinically useful as an immunosuppressive drug. One-year cadaveric renal allograft survival, previously zero, was increased by the combination of prednisone and azathioprine to 45% to 50%. By the 1970s, it was clear that transplantation of kidneys from living related donors resulted in better initial and long-term graft survival rates than transplantation of kidneys from cadaveric donors. After cyclosporine (12,13) and muromonab-CD3 (OKT3 monoclonal antibody) (14,15) were introduced into clinical practice in the early 1980s, 1-year survival rates for renal allografts improved from approximately 60% to between 80% and 90%. From 1988 to 1996, 1-year graft survival increased from 89% to 94% for recipients of living donor kidneys and from 77% to 88% for recipients of cadaveric donor kidneys (16). In the 1990s, the introduction of new immunosuppressive agents led to a marked decrease in the incidence of acute rejection (17). The 2001 UNOS report (6) shows that 1-year graft survival has increased further to 89% and 95% for cadaveric and living donor transplants, respectively.

TABLE 1.1. Projected half-life of renal transplants from 1988-1995

Using the SRTR, Hariharan et al (16) reported that the projected half-life of grafts has improved progressively from 7.9 to 13.8 years for a cadaveric donor and 12.7 to 21.6 years for a living donor for the period from 1988 to 1995 (Table 1.1). This improvement was not wholly attributable to any of the newer immunosuppressive drugs, as it took place in the era of treatment with cyclosporine, azathioprine, and prednisone. Given these findings, more attention is being paid not only to immunologic but also to nonimmunologic strategies that may improve long-term outcomes through prevention of late allograft loss (18). This chapter will focus on pre- and posttransplant donor and recipient risk factors important to graft and patient survival as well as the effects of pharmacologic advances on these outcome measures.

CAUSES OF GRAFT LOSS: MAJOR ETIOLOGIES

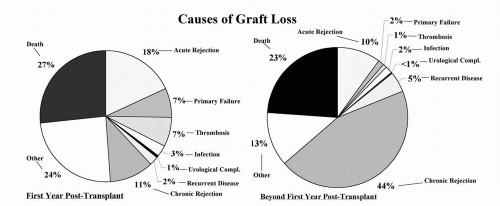

The major causes of renal allograft loss are acute renal injury, death with a functioning graft, chronic allograft nephropathy (CAN), and recurrent disease. However, the relative importance of these etiologies varies over time (Fig. 1.2). In the early posttransplantation period, nonfunction, technical complications, and acute rejection (AR) remain the leading causes of graft loss. For the purposes of this chapter, only those etiologies responsible for late allograft loss will be reviewed.

Death with a Functioning Allograft

Data have now emerged to highlight the importance of death with a functioning allograft as a major contributor to late allograft loss (19, 20, 21, 22, 23), particularly as more elderly patients are being transplanted. Depending on the age and comorbidities of the patient population, the transplant era, and the posttransplant interval being studied, death with a functioning allograft ranks as first or second among the leading causes of graft loss (19, 20, 21,24). Death with graft function is usually due to comorbidities, such as heart disease, infection, and cancer. Many of these conditions can be modified by the immunosuppressive medications utilized in transplant patients and by the level of renal function maintained by the graft. Analysis of graft loss independent of these deaths (death-censored graft loss) allows one to approximate risks for injury to the graft itself, but also may lead to bias by not taking into account factors that may influence both endpoints. Although large interventional trials designed to minimize patient death in the transplant population are not available to establish proof of efficacy, as in the general population, the usual measures aimed at modifying risk factors for patient death, particularly cardiovascular death, are generally applied (19). These measures include weight loss, smoking cessation, strict glycemic control, lipid-lowering therapy, aspirin, and correction of anemia. Most centers aggressively monitor and treat infection in transplant patients and attempt to minimize immunosuppression in those patients at high risk for infections, particularly the elderly. General guidelines for cancer screening are usually followed.

Chronic Allograft Nephropathy

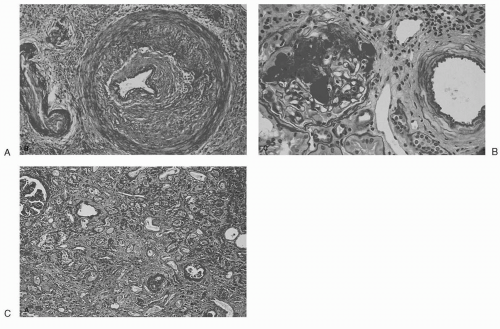

CAN is a poorly defined clinical-pathological entity manifested clinically by a gradual decrease in renal function over months to years after transplantation, coupled with hypertension (HTN) and variable degrees of proteinuria (25). Histopathologic findings include widespread obliterative vasculopathy, glomerulosclerosis and interstitial fibrosis with tubular atrophy (Fig. 1.3). Rarely, transplant glomerulopathy, with splitting of the glomerular basement membrane, is seen. However, these changes overlap those of human aging and age-related diseases in native kidneys. Thus, CAN is better considered a final common pathway of a variety of stresses to renal tissue (20). Both immunologic and nonimmunologic factors have been shown to contribute to CAN (26), although these factors should not be considered mutually exclusive.

Of the immunologic factors, AR (particularly severe, recurrent, and late) remains one of the highest risk factors for the development of CAN. Further evidence for immunologic mechanisms stems from observations that the half-life of cadaveric renal allografts is longer when donated from human leukocyte antigen (HLA)-matched versus HLA-mismatched donors (27). Moreover, in a study by Legendre et al (28), 2-year protocol biopsies on HLA-identical but not HLA-mismatched kidneys from patients that did not have episodes of AR or acute renal failure (ARF) showed no histopathologic findings of CAN. Both cellular (29, 30, 31, 32) and humoral (33,34) mechanisms likely play a role in the pathogenesis of CAN, although the relative contribution of each is still the subject of intense debate. Nonimmunologic factors include brain death in the donor, ischemia-reperfusion injury, inadequate donor kidney nephron mass, calcineurin inhibitor toxicity, hypertension, hyperlipidemia, cytomegalovirus (CMV) infection, and polyoma virus nephropathy.

To date, there have been no convincing studies to demonstrate that secondary intervention is effective in reducing the rate of graft loss due to CAN. The ultimate goal in transplantation is to achieve a state of tolerance (35), which would ideally eliminate the immunologic factors, but which may also serve to attenuate the effects of the nonimmunologic factors (36). It is clear that any successful strategy will need to be broad-based to account for the immunologic, physiologic, infectious, and metabolic aspects of this difficult problem.

Recurrent Disease

Glomerulonephritis (GN) is a major cause of ESRD and is the primary disease in roughly 30% to 50% of patients undergoing renal transplantation (24,37). Accurate estimates of the incidence of recurrent GN and its associated impact on graft survival are hampered by the paucity of cases seen within any single transplant center. Results from small studies should be interpreted with caution, and although reports from data registries give good estimates of rates of graft loss, they often lack information on recurrence rates and clinical outcomes. Recurrence has been reported in 6.0% to 19.4% of renal allograft recipients, and the prevalence increases with the duration of follow-up (24,38, 39, 40, 41). Those patients with recurrence have a higher rate of graft loss, with recurrence reported as the cause of loss in 1.1% to 8.4% of transplant recipients (24,38, 39, 40, 41, 42).

FIG. 1.3. Characteristic histopathologic findings in CAN: (A) obliterative vasculopathy, (B) glomerulosclerosis, (C) interstitial fibrosis. (From Sayegh MH. Kidney Int 1999;56:1967, with permission.)

When interpreting rates of recurrence, several issues require consideration. First, an accurate histologic diagnosis is essential both pre- and posttransplantation. Unfortunately, many patients reach ESRD without a definitive renal biopsy, and most renal transplant centers do not routinely perform diagnostic biopsies on patients with proteinuria and/or chronic allograft dysfunction. These scenarios lead to underdiagnosis and therefore an underreporting of the true recurrence rate. Second, the lack of specificity in the pathologic features of some conditions often leads to errors in diagnosis. Compounding this problem is the frequent occurrence of coexisting glomerular pathologies. Third, recurrence rates can be complicated by the existence of de novo glomerular disease. Finally, the duration of follow-up of a particular study is important, since the impact of recurrence on graft survival will increase with longer periods of follow-up.

Using data compiled by the Australian and New Zealand Dialysis and Transplant Registry, Briganti et al (24) studied the incidence, timing, and relative importance of allograft loss due to the recurrence of GN in 1,505 renal transplant recipients with biopsy-proved GN as the cause of ESRD. At 10 years posttransplantation, the incidence of allograft loss due to recurrent GN was 8.4%, as compared with 4.1% for loss due to acute rejection, 15.0% for loss due to death with a functioning allograft, and 20.3% for loss due to CAN, making recurrent GN the third most common cause of allograft loss in this patient population. Allograft loss due to recurrent GN occurred in patients with focal segmental glomerulosclerosis (FSGS), mesangiocapillary glomerulonephritis type I (MCGN-I), MCGN type III, IgA nephropathy, Henoch-Schoenlein purpura, membranous nephropathy, and pauci-immune crescentic GN. The greatest incidence of allograft loss occurred among patients with FSGS and MCGN-I, and allograft loss tended to occur earlier in these patients. The 10-year incidence of allograft loss from any cause was similar among recipients with biopsy-proved GN and among those with renal failure from other causes. Since the incidence of allograft loss due to GN increases over time, recurrent GN will likely become an increasingly important cause of allograft loss as overall long-term graft survival rates continue to improve.

RISK FACTORS FOR PATIENT AND GRAFT SURVIVAL

Pretransplant Factors

Waiting Time/Preemptive Transplantation

As presented earlier, transplantation has been shown to improve survival when compared with maintenance dialysis for patients with ESRD (11). Since the incidence of treated ESRD has nearly doubled over the past decade, with no substantial growth in the cadaveric donor kidney pool, the waiting time to receive a renal allograft has been steadily increasing (43). Earlier retrospective single-center (10) and small database (9) analyses yielded conflicting results regarding the effect of length of pretransplant dialysis on patient and graft survival. The argument that time on dialysis itself is an independent risk factor for graft loss was strengthened by a subsequent large, retrospective study based on USRDS data showing a clear dose-dependent detrimental effect of dialysis time on transplant outcomes for both patient and graft survival and, somewhat surprisingly, also for death-censored graft survival (44). These findings held true for both cadaveric and living donor transplantation and, importantly, were proportional across different primary disease groups, suggesting that the risk associated with increased time with ESRD is not simply related to cumulative disease burden alone (i.e., diabetes mellitus or HTN versus GN).

These data were further substantiated by Mange et al (45), who demonstrated in a retrospective cohort study of 8,481 patients that preemptive transplantation in living donor transplant recipients was associated with a 52% reduction in the risk of allograft failure during the first year after transplantation, an 82% reduction during the second year, and an 86% reduction during subsequent years, as compared with transplantation after dialysis. Furthermore, increasing duration of dialysis was associated with increasing odds of AR within 6 months after transplantation, suggesting that dialysis may alter the immune status of patients with chronic kidney disease (CKD) as an explanation for the increased incidence of graft loss. Kasiske et al (46) studied this issue further by examining outcomes of patients who receive preemptive kidney transplants. Retrospective analysis was performed on 38,836 first-kidney transplants carried out between 1995 and 1998. Overall, 13.2% of the transplants were preemptive, which comprised 7.7% of the total number of cadaveric transplants and 24% of the total number of living donor transplants. Preemptive transplant recipients were more likely to be less than 17 years old, white, actively working, privately insured, college graduates, and transplanted with kidneys having fewer HLA mismatches (all patient characteristics shown to favor improved graft survival). However, multivariate analysis demonstrated that the beneficial effect of preemptive transplantation on graft survival was independent of other pretransplant characteristics.

To remove potential donor-related confounding factors present in previous studies, an analysis of 2,405 kidney pairs harvested from the same donor but transplanted into recipients with different ESRD time was performed (47). Five- and 10-year unadjusted graft and death-censored graft survival rates were significantly worse in paired kidney recipients who had undergone more than 24 months of dialysis compared with paired kidney recipients who had undergone less than 6 months of dialysis. Although advanced age, African American race, and higher panel-reactive antibody (PRA) percentage were characteristics of the recipient population, when adjustments in the multivariate analysis for these risk factors were made, the trend was maintained. Important to these findings was the exclusion of six-antigen-matched transplants, since the current national sharing policy results in a higher proportion of such kidneys transplanted preemptively, regardless of recipient waiting time. In a follow-up study using a nontraditional endpoint, death after allograft loss (DAGL), risk factors were analyzed for a total of 78,564 primary renal transplant patients reported to the USRDS from 1988 to 1998 (48). By Cox model, dialysis for more than 2 years was associated with a more than twofold RR for DAGL, while transplant time was not associated with DAGL. These findings suggest that time with ESRD is a strong, independent, and potentially modifiable risk factor for renal transplant outcomes and emphasize the need for timely referral of all ESRD patients for transplantation.

HLA Matching

Although improvements in immunosuppression have diminished its relative importance, HLA matching continues to be a major determinant of survival in large retrospective analyses (49). Held et al (50) studied a series of Medicare patients receiving a first cadaveric kidney transplant between 1984 and 1990. Both multivariate and univariate estimates indicate that grafts with fewer HLA mismatches tend to survive longer. The survival of grafts with no mismatches is substantially better than that of grafts with one mismatch, whereas the survival of grafts with one to six mismatches is more homogeneous. The most recent UNOS report confirms these findings, showing that the 1-year and 5-year graft survival rates for six-antigen-matched cadaveric kidney transplants are 94.9% and 68.2%, respectively, as compared with 91.1% and 55.3%, respectively, for zero-matched cadaveric transplants (6). Likewise, a six-antigen-matched living donor kidney has a 5-year graft survival of 87% compared with 57% for a zero-antigen-matched kidney (6).

Through an agreement among U.S. transplant centers, UNOS established a program in 1987 to ship kidneys anywhere in the country to recipients who have the same HLA-A, B, and DR antigens as the donor. The HLA matching policy has been liberalized twice, to include kidneys with the same constellation of HLA antigens as the recipient even though fewer than six antigens were identified, and again to include zero-A, B, DR-mismatched kidneys (51). Takemoto et al (52) recently examined the results of the national kidney-sharing program after more than 10 years of operation. The survival rate of HLA-matched kidneys was similar regardless of the criteria followed and was significantly higher than that of HLA-mismatched transplants (half-life of 12.5 years versus 8.6 years, respectively). The benefit of HLA matching was diminished in kidneys from older donors, with the difference in projected 10-year rates of graft survival between HLA-matched and HLA-mismatched recipients greatest (28%) among those whose donor was 15 years of age or younger and least (10%) among those whose donor was older than 55 years. There was no significant increase in graft survival associated with HLA matching in black recipients. Finally, no improvement in graft survival was noted when cold ischemia time (CIT) was greater than 36 hours. The findings with CIT greater than 36 hours were confirmed in a similar study by separate investigators (53).

Several other publications have explored further the clinical contexts in which HLA matching is associated with improved graft survival. Using data from UNOS, Mange et al (54) compared the outcomes of organs from the same donors for which one kidney was shipped and one was transplanted locally. In their analysis, a significant association between shipment and allograft survival at 1 year was noted for HLA-mismatched but not HLA-matched kidneys. In a single-center study, Asderakis et al (55) showed that ignoring HLA match to achieve a shorter CIT for donors older than 55 years resulted in only a 3.7% increase in graft survival. Similar results have been reported for other single-center studies aimed at optimizing HLA-independent risk factors for allograft survival at the expense of HLA matching (56,57). In fact, Terasaki et al (58) showed that survival of grafts from living unrelated donors was comparable to that of parental donor grafts, despite significantly higher major histocompatibility complex (MHC) mismatches in the former group. In this same study, survival rates of zero-antigen-mismatched cadaveric grafts that functioned immediately were similar to those of spousal grafts. However, the survival rate of grafts mismatched for all six antigens that functioned on the first day was higher than that of a perfectly matched graft that did not function immediately. In summary, HLA matching is only one factor in the complex interplay of risk factors important to long-term graft survival, and there is a point of diminishing returns for any HLA-matching strategy that increases the overall number of risk factors for graft loss.

Donor Source

Live donor (LD) source, even when corrected for ischemic times and delayed graft function (DGF), is one of the strongest factors associated with good graft survival (16,58). This advantage occurs despite higher degrees of HLA mismatching in LD kidneys and does not appear to be affected by donor race, donor age, length of cold ischemia, or the use of preoperative donor transfusions (58). Although better compliance with immunosuppressive medications is a reasonable explanation in the case of spousal donated grafts, survival rates of grafts from other living unrelated donors are similarly high. In all likelihood, the advantages of living donated kidneys are a combination of better quality kidneys undergoing minimal trauma in a better educated, highly motivated recipient of higher socioeconomic status.

Donor Age

One of the strongest risk factors for poor long-term graft survival is advanced donor age (20,59,60). Kidneys from older donors show an increased frequency of adverse features after transplantation, including DGF and an elevated baseline serum creatinine. Although the reason is not entirely clear, decreased functional reserve due to age and age-related diseases such as HTN and vascular disease probably explain the phenomenon. Alternatively, there may exist accelerated aging after transplantation, which would manifest itself more in an older kidney (61). Donor age is also a risk factor for recipient death with a functioning allograft (60), which likely reflects the known association of cardiovascular death with chronic kidney disease (62, 63, 64) and more recently, with elevated serum creatinine at 1-year posttransplantation (65).

Non-Heart-Beating Donors

Non-heart-beating donors (NHBD) are those donors who have experienced irreversible cessation of circulatory and respiratory function (66), in contrast to donors with a heartbeat (HBD) in whom death is determined according to neurologic criteria. By definition, a kidney from a NHBD experiences a prolonged phase of insufficient or complete lack of perfusion (i.e., warm ischemia), which is purported to cause irreversible damage and, therefore, poor short-term and long-term graft survival. In fact, the use of NHBD has been restricted almost exclusively to kidney transplantation, given the availability of dialysis for support should the graft not function posttransplantation. The Maastricht protocol is used by the majority of centers performing such transplants and requires a waiting time of 10 minutes after cessation of cardiac massage and mechanical ventilation before organ retrieval, with the diagnosis of death made by physicians independent of the procurement team (67). Furthermore, the protocol categorizes donors: category I, dead on arrival; category II, unsuccessful resuscitation; category III, cardiac arrest; category IV, cardiac arrest in a brain-dead donor. The use of NHBD represents an attempt to increase the donor pool in response to the increasing shortage of suitable cadaveric organs. Although there are numerous centers worldwide that have used NHBD since the early 1980s, the inherent ethical issues have prevented the practice from being widely embraced by most U.S. centers.

Several small single-centered studies have addressed the effect of NHBD on graft outcome, albeit with relatively short follow-up periods (68, 69, 70, 71, 72). An increase in DGF was seen in all cases compared with kidneys from HBD. In two of the studies, in which the majority of NHBD were category II or III, there was no significant difference in patient or graft survival at 1 and 3 years compared with recipients of HBD (70,71). In one of the studies, the serum creatinine was marginally higher at 5 years, emphasizing the importance of long-term follow-up in these recipients (70). Sanchez-Fructuoso et al (68) excluded donors of greater than 55 years and warm ischemia time greater than 120 minutes as part of their locally mandated policy whereby subjects who die on the street suddenly are transported immediately to the donation center. In their analysis, there was no significant difference in graft survival at 1 and 5 years for recipients of category I (68). Weber et al (67) reported long-term data on 122 NHBD kidney transplants, who received sequential therapy with antithymocyte globulin (ATG) initially, cyclosporine starting at day 10, and triple therapy with cyclosporine thereafter. Case-controlled recipients of HBD received triple therapy immediately posttransplantation. Confirming previous studies, DGF affecting kidneys from NHBD was approximately double that affecting kidneys from HBD. Ten-year graft survival was no different for kidneys from NHBD (78.7%) and HBD (76.7%). Interestingly, DGF had no effect on graft survival for kidneys from NHBD but was associated with decreased graft survival for those from HBD. More recently, Brook et al (73) demonstrated higher 6-year graft survival rates in patients with kidneys from NHBD with DGF than in patients with kidneys from HBD with DGF (84% versus 62%, respectively), despite a longer duration of DGF in the NHBD group. 
Although these results may be influenced by differences in immunosuppression between the two groups, it appears that the initial fears held by transplant physicians and patients regarding the use of NHBD were greater than the outcomes warrant and that NHBD represent an untapped source of cadaveric organs.
