| Authors | Year published | Simulator studied | Exercises performed in study | Number of participants in study | Validity assessed |
|---|---|---|---|---|---|
| Brinkman et al. | 2013 | dVSSS | Ring and rail 2 | 17 novices | Repetitions required |
| Colaco et al. | 2013 | RoSS | Ball drop | Current urology residents at Robert Wood Johnson University Hospital | Construct |
| Hung et al. | 2013 | dVSSS | Pegboard 2, ring and rail 2, suture sponge 3, tubes | 38 novices, 11 experts | Construct |
| Stegemann et al. | 2013 | dVSSS | Ball placement, suture pass, fourth arm manipulation | 65 novices | Attempted curriculum validity |
| Brinkman et al. | 2012 | dVSSS | Ring and rail 2 | 17 novices, 3 experts | Repetitions required |
| Finnegan et al. | 2012 | dVSSS | 24 exercises | 27 attendings, 3 fellows, 9 residents | Construct |
| Hung et al. | 2012 | dVSSS | 17 exercises | 24 novices, 3 experts | Concurrent, predictive |
| Kang et al. | 2012 | Mimic dvT | Tube 2 | 20 novices (medical students) | Repetitions required |
| Kelly et al. | 2012 | dVSSS | Camera targeting, energy switching, threading rings, dots and needles, ring and rail | 19 novices, 9 intermediates, 10 experts | Face, content, construct |
| Lee et al. | 2012 | Mimic dvT | Pegboard ring transfer, ring transfer, matchboard, threading of rings, rail task | 10 attendees, 2 fellows, 4 senior residents, 4 junior residents | Face, content, construct, concurrent |
| Liss et al. | 2012 | dVSSS and Mimic dvT | Pegboard 1, pegboard 2, tubes | 6 students, 7 attendees, 7 junior residents, 6 senior residents, 6 fellows | Content, construct |
| Perrenot et al. | 2012 | dVSSS and Mimic dvT | Pick and place, pegboard, ring and rail, match board, camera targeting | 5 experts, 6 intermediate, 8 beginners, 37 residents, 19 nurses/medical students | Face, content |
| Gavazzi et al. | 2011 | SEP | Arrow manipulation, surgeon’s knot | 18 novices, 12 experts | Face, content, construct |
| Hung et al. | 2011 | dVSSS and Mimic dvT | 10 virtual reality exercises | 16 novices, 32 intermediates | Face, content, construct |
| Jonsson et al. | 2011 | ProMIS | Pull/loosen elastic bands, cutting a circle, suture tying, vesicourethral anastomosis | 5 experts, 19 novices | Construct |
| Korets et al. | 2011 | Mimic dvT | 15 exercises | 16 residents | Concurrent |
| Lerner et al. | 2010 | Mimic dvT | Letter board, pick and place, ring walk, clutching cavity | 12 medical students, 10 residents, 1 fellow | Concurrent |
| Seixas-Mikelus et al. | 2010 | RoSS | Clutch control, ball place, needle removal, fourth arm tissue removal | 31 experts, 11 novices | Content |
| Seixas-Mikelus et al. | 2010 | RoSS | Basic ball pick and place, advanced ball pick and place | 24 experts, 6 novices | Face |
| Kenney et al. | 2009 | Mimic dvT | Pick and place, pegboard, dots and numbers, suture sponge | 19 novices, 7 experts | Face, content, construct |
| Sethi et al. | 2009 | Mimic dvT | Ring and cone, string walk, letter board | 5 experts, 15 novices | Content, construct |

Table 19.2

Advantages/disadvantages and confirmed validation of available robotic surgical simulators

| Simulator | Pros | Cons | Validation |
|---|---|---|---|
| Mimic da Vinci Trainer (dVT) | 1. Stand-alone unit; 2. 3D viewer | 1. Instruments not as robust as dVSSS; 2. Does not use actual console | 1. Face; 2. Content; 3. Construct; 4. Concurrent |
| Intuitive Surgical da Vinci Surgical Skills Simulator (dVSSS) | 1. Use of actual surgical console; 2. 3D viewer; 3. Potentially able to use as warm-up just prior to surgery | 1. Not available when console used for surgery | 1. Face; 2. Content; 3. Construct; 4. Concurrent |
| RoSS | 1. Stand-alone unit; 2. 3D viewer | 1. Does not use actual console | 1. Face; 2. Content; 3. Construct |
| SEP | 1. Stand-alone unit | 1. Lack of 3D viewer | 1. Face; 2. Content; 3. Construct |

Robotic Surgical Simulator System (RoSS)

The Robotic Surgical Simulator System (Simulated Surgical Systems, Williamsville, NY) is described as a stand-alone robotic simulator that includes a 3D display and hardware simulating the da Vinci surgical system console (Fig. 19.1). The software includes an algorithm that captures various metrics, which in turn are used to generate a score reflecting the outcome of the completed exercises. Both the face validity [71] and the content validity [72] of the RoSS have been studied. For content validity, Seixas-Mikelus et al. recruited 42 subjects to use the simulator and complete surveys regarding their experience. Of the respondents, 94 % thought the RoSS would be useful for training purposes. A high percentage of respondents also favored using the RoSS to test residents before OR experience and in the certification of robotic surgery. Face validity was assessed in a separate study also authored by Seixas-Mikelus et al. [71]. Thirty participants answered questionnaires regarding the realism of the simulator, and the results indicated that 33 % of novices, 78 % of intermediates, and 50 % of experts thought the simulator was somewhat close. Sixty-seven percent of novices, 22 % of intermediates, and 40 % of experts thought it was very close. Ten percent of experts rated the simulator as not close. Colaco et al. published a study in 2013 demonstrating construct validity of the RoSS [73]. In their study, training time, performance, and the participant’s rating of the simulator were found to be correlated.

Fig. 19.1

Robotic Surgical Simulator System (RoSS) (Courtesy of SimSurgery, Norway)

SimSurgery Education Platform (SEP)

The SEP robotic trainer (SimSurgery, Oslo, Norway) is described as a stand-alone system with 21 exercises designed to train robotic surgical skills. Exercises are broadly divided into tissue manipulation, basic suturing, and advanced suturing. The face validity and content validity of this system have been verified in a study by Gavazzi et al. [74]. One noted drawback of the SEP compared to other available simulators was that it lacked the 3D viewer that is integrated into the da Vinci console. Thirty participants performing tasks involving arrow manipulation and tying a surgeon’s knot were studied to assess face, content, and construct validity. Participants rated the realism “highly” (90 %), confirming face validity. Construct validity was also verified, as the expert group outperformed the novice group. More metrics differed between the novices and experts in the knot-tying exercise than in the place-an-arrow exercise. This was attributed to the greater difficulty of the knot-tying exercise, with the observation that it was easier to distinguish between novices and experts when the task complexity was increased.

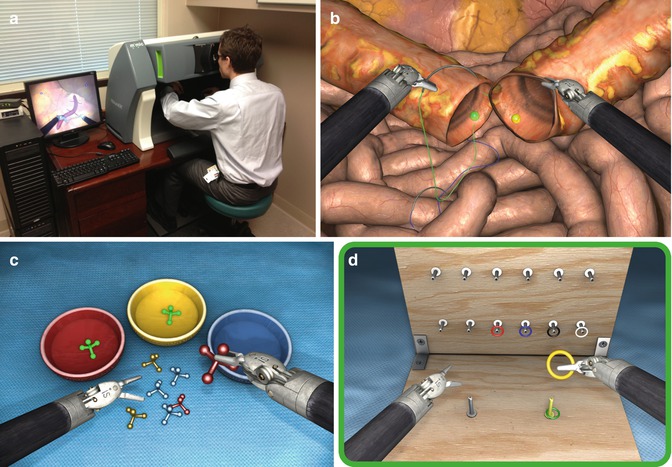

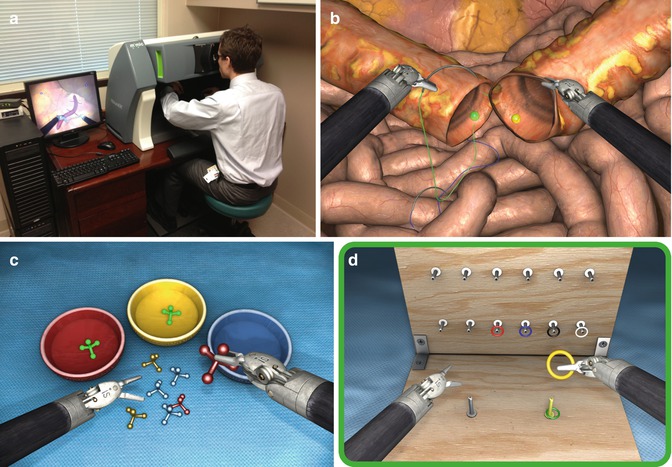

Mimic da Vinci Trainer (dVT)

Mimic dV-Trainer (Mimic Technologies Inc., Seattle, Washington) became available in 2007 and is a stand-alone unit for simulation of the da Vinci surgical system (Fig. 19.2). The unit includes foot pedals to simulate the equipment on an Intuitive Surgical da Vinci S or Si system. The scoring of the exercises is performed with an internal algorithm that generates a total score as well as separate scores for 11 other metrics. The face, construct, and content validity have been established in various studies [75, 76]. In a study by Sethi et al., 20 participants underwent three repetitions of three separate exercises and completed questionnaires regarding the use of the simulator. The 15 novices were differentiated from the experts by their performance, thereby confirming the construct validity. Participants rated the realism of the simulator above average to high in all parameters investigated, confirming the face validity. Content was assessed by the expert surgeons, who concluded that the exercises were valid for the training of residents. Kenney et al. demonstrated face, content, and construct validity on the Mimic dV-Trainer using a different set of exercises than Sethi et al. In their study, 24 participants underwent training with four exercises [77]. Experienced surgeons were again found to outperform novices, supporting construct validity. The simulator was rated as useful for training by the expert surgeons and the virtual reality representation of the instruments was found to be acceptable, but some of the exercises involving needle suturing into a sponge were found to be less than ideal.

Fig. 19.2

(a) Mimic da Vinci Trainer (dVT) in use at Indiana University Urology residents’ office, (b) tube exercise, (c) pick and place exercise, (d) pegboard exercise (b–d Courtesy of Mimic Technologies, Park Ridge, IL, USA)

The concurrent validity of the Mimic dVT has also been established [78, 79]. Lerner et al. and Korets et al. both looked at concurrent validity of the Mimic trainer compared to inanimate exercises on the da Vinci console. Korets et al. had three groups of participants. One group completed 15 exercises in the Mimic curriculum and was considered to pass when all exercises had a minimal score of 80 %. The second group had a 90 min personalized session with an endourology fellow serving as mentor. The third group had no additional training. The results demonstrated improvement of performance for the two groups who had training over the control group (no training). The difference in improvement between the Mimic and the da Vinci training groups was not significant, leading Korets et al. to conclude that either method (VR or inanimate training) was acceptable in improving skills.

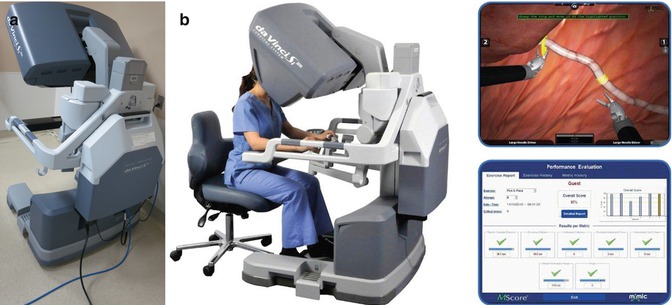

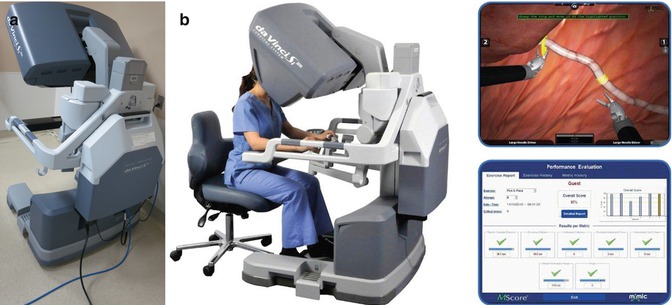

da Vinci Surgical Skills Simulator (dVSSS)

The da Vinci Skills Simulator (Intuitive Surgical, Sunnyvale, CA) is a backpack unit that attaches to the da Vinci Si or Si-e surgical system and allows the user to perform virtual reality exercises on the robotic console (Fig. 19.3). The exercises were developed in collaboration with Mimic Technologies and Simbionix. Broad categories of training exercises include camera/clutching, fourth arm integration, system settings, needle control/driving, energy/dissection, EndoWrist manipulation, and knot tying.

Fig. 19.3

(a) da Vinci Surgical Skills Simulator (dVSSS) at Indiana University main operating room, (b) dVSSS attached to console, example of ring walk exercise, and associated metrics (b, Courtesy of Intuitive Surgical, Inc., Sunnyvale, CA, USA)

Finnegan et al. published an industry-sponsored study demonstrating the construct validity of the dVSSS in 2012 [80]. In their study, the participants completed all 24 exercises within the Mimic software on the dVSSS. Their statistical analysis demonstrated that within certain exercises construct validity was present. The participants were divided into three groups according to the number of robotic cases previously performed. Significant differences were seen within certain metrics in 15 of the exercises that they studied. The authors suggested that certain exercises were better than others at distinguishing between groups of trainees and that this would have to be optimized in the future with further studies. Exercises that were not valid could be removed from a potential curriculum.
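As a rough illustration of what a construct validity check involves, the sketch below tests whether two experience groups differ on one simulator metric. It is not the analysis used by Finnegan et al., which is not described here: the choice of a two-sided permutation test on the difference in means, the function name `permutation_p_value`, and the scores themselves are all assumptions made for illustration, and only two groups are compared rather than three.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Reassign group labels at random and recompute the difference.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical overall scores (0-100) for a single exercise.
# These are invented values, not data from any cited study.
novice_scores = [52, 61, 48, 57, 66, 59, 50, 63]
expert_scores = [84, 79, 91, 88, 76, 85]

p = permutation_p_value(novice_scores, expert_scores)
print(f"p = {p:.4f}")
```

A small p-value for a given metric would suggest that the metric separates the groups. Repeating the check per exercise is one way to identify exercises that fail to discriminate and could be dropped from a curriculum.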

Groups from the University of Southern California (USC) and Thomas Jefferson University have published studies on the validity of the dVSSS [81, 82]. Both groups confirm that the simulator has face, content, and construct validity. Hung et al. designed their study as a prospective study with involvement of experts from urology, cardiothoracic surgery, and gynecology. Participants in both studies rated the realism of the simulator highly, with scores of 8/10 in Hung’s study [82] and 4.1–4.3 out of 5 in Kelly’s study [81]. Construct validity was confirmed in the USC study, with the experts outperforming the rest of the participants in the study in nearly all measures. In contrast, the group from Thomas Jefferson University found that construct validity was present only when their intermediate and expert participants were combined into a single group. They reasoned that this was due to differences in study design, including sample size, number of repeats of each exercise, exercises studied, and method of defining experience levels (grouping). Content validity was established in both studies, as the experts determined that the simulator was a good tool for training residents and fellows.

Other Methods of Education for Robotics Training and Comparison Between Available Robotic Simulators

Although there is research data on each robotic simulator individually, limited data is available comparing the simulators to each other. This makes it difficult to decide on what the standard should be in robotic surgery simulation [83]. Whereas laparoscopic surgery has a validated curriculum involving the FLS, there still remains work to be completed in the domain of robotic surgical simulation to achieve the same end point. In this vein, some studies on robotic simulation have begun to look at how the simulators compare to validated training exercises. Hung et al. studied three options for robotic surgery education, which included inanimate tasks, virtual reality simulation, and surgery on a live porcine model. Each method was identified to have a different set of advantages and disadvantages. The inanimate task is cost-effective, but requires the availability of an extra robot or the use of an existing robot during off-hours. The virtual reality simulator is currently costly but can be made more readily available for trainees if it is a stand-alone unit. The porcine model is considered high fidelity since it models the tissues most similarly to the real surgery but is also very costly, requires a lot of additional preparation, and requires that the robot be available for trainees to use. Despite differences within each method of education, Hung et al. demonstrated that there was correlation of performance across the three modalities. Both the VR exercises (four selected exercises) and the inanimate exercises used in the study were previously validated for construct validity. The results of this study also demonstrated construct validity as the experts outperformed the novices in the tasks evaluated. The authors concluded that all modalities were useful and targeted the same skill sets.

One of the few studies that examine differences between the simulators is by Liss et al., who compared the construct validity of the Mimic dVT and the dVSSS and the correlation between them [84]. Participants including medical students, residents, fellows, and staff urologists were instructed to perform exercises first on the dVSSS and then on the dVT. Exercises included pegboard 1, pegboard 2, and tubes. Participants were evaluated by metrics provided within the software that had been previously validated. Results indicated that there was a correlation between the simulators in the suturing task, which was deemed the most difficult and relevant task. Both simulators were identified to have good construct validity, as they were able to distinguish between the participants of various skill levels. The authors discussed the advantages and disadvantages of each system, finding that the Mimic dVT was easier to access because it is a stand-alone system. Both systems had a similar cost at an estimated $100,000. The dVSSS was found to have better face and content validity as rated by the study participants. Another benefit identified for the dVSSS was that since the system is attached to the console used for the actual surgery, it can serve as a warm-up just prior to surgery. Conversely, a drawback of the simulator being attached to the actual console is that it precludes use of the system for practice when a surgery is being performed. Hung et al. suggested that a limitation of the Mimic dVT is that it does not use the actual da Vinci console and therefore lacks the realism of the dVSSS [82].
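To make the idea of correlation between simulators concrete, the sketch below computes a Pearson correlation coefficient for paired scores from the same participants on two platforms. The coefficient used by Liss et al. is not stated here, so Pearson's r is an assumption, as are the function name `pearson_r` and the invented score lists.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same seven participants on one exercise.
# Invented values for illustration only.
dvsss_scores = [45, 58, 62, 70, 77, 83, 90]
dvt_scores = [40, 55, 65, 66, 80, 79, 92]

r = pearson_r(dvsss_scores, dvt_scores)
print(f"r = {r:.2f}")
```

A value of r near 1 would indicate that participants who score well on one simulator tend to score well on the other. A rank-based coefficient may be preferable when scores are not approximately linear in skill.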

At the Indiana University urology department, Lerner et al. studied participants undergoing training with the Mimic dVT and assessed improvements in task completion on the da Vinci surgical system while performing inanimate exercises [78]. Results demonstrated that the novice learners utilizing the dVT improved their baseline scores in pattern cutting and pegboard times. Compared to a group consisting of novice residents, the medical students were seen to make similar improvements in time and accuracy between the initial and final sessions. The virtual training was seen to be beneficial in improving outcomes for exercises that were similar to those in the inanimate exercise set on the da Vinci surgical system. The Mimic trainer was therefore identified as a tool for novices to familiarize themselves with the basic mechanics of robotic surgery. Through simulator practice, a decrease in instrument collisions and an increase in comfort with the use of the foot pedals/console controls were observed and thought to result in improved performance on the actual console.

Hung et al. demonstrated the benefit of virtual simulation with the da Vinci Skills Simulator on the performance of wet lab skills that mimicked surgery [85]. Tasks included bowel resection, cystotomy and repair, and partial nephrectomy in wet lab. Evaluation was carried out by experts utilizing the GOALS metrics, which were described by Vassiliou et al. as a rating scale for evaluation of technical skills in laparoscopy [86]. Simulation involved the completion of 17 selected tasks. The trainees with the lowest baseline scores on the da Vinci Skills Simulator were found to improve the most. This suggested that trainees who require remedial work may benefit the most from additional simulator time. Furthermore, they found correlation between the trainees’ scores in the virtual simulation and the wet lab exercises. Excessive tissue pressure, collisions, and time were also found to have correlation.

Robotic Surgery Training Curves and Applicability of Simulator to Expert Robotic Surgeons

Although it is generally agreed upon that simulator training is safe and helps novice trainees attain the basic skills required to operate the robotic console, there is less documented benefit when the simulator is assessed in the context of educating expert surgeons. In order to better understand these phenomena, we look at studies that have identified the shape and duration of learning curves of trainees on the simulator. A number of recent studies have also delved into the reasons why certain metrics on the robotic simulators may not be appropriate for assessing the expert robotic surgeon.

Kang et al. studied the number of repetitions required for a novice to train in a given exercise on the Mimic dV-Trainer [87]. They selected the “Tube 2” program, which is representative of the urethral anastomosis in a robotic-assisted prostatectomy. The participants consisted of 20 medical students who were considered naïve to the surgical procedure. No comparison to expert performance was included in this exercise. Analysis was carried out to identify the number of repetitions required for the slope of the learning curve to plateau, indicating a stable performance level of the exercise. Seventy-four repetitions were identified as the number required for a plateau. The authors concluded that the participants could start at a naïve level and spend 4 h to complete the 74 repetitions required to reach mastery of the exercise. A caveat noted was that mastery of the exercise in the simulator was not necessarily indicative of performance in real surgery. A drawback of the study was that the primary factor assessed was time for completion of the task, which is not necessarily the best metric. Real surgery involves more than quick completion of a procedure and may also require careful dissection in appropriate tissue planes. Studies or exercises only assessing time may be overlooking other critical metrics. Jonsson et al. also commented on the applicability of tasks to the intermediate trainee and expert surgeon. They recommended that sufficiently complex training exercises be included, as construct validity may not hold for simple tasks [88].
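The notion of a learning curve whose slope plateaus can be sketched as follows. This is not the analysis performed by Kang et al., whose method is not detailed here. It assumes one simple approach: fit a least-squares slope to a sliding window of completion times and report the first repetition at which the slope becomes nearly flat. The window size, the tolerance, the function names, and the synthetic exponential-decay data are all illustrative assumptions.

```python
from math import exp


def window_slope(values):
    """Least-squares slope of values against their index 0..n-1."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def first_plateau(times, window=10, tolerance=0.5):
    """Return the 1-based repetition where the curve first flattens, or None.

    A window counts as flat when the absolute fitted slope
    (seconds per repetition) is below `tolerance`.
    """
    if window < 2:
        raise ValueError("window must contain at least 2 repetitions")
    for start in range(len(times) - window + 1):
        if abs(window_slope(times[start:start + window])) < tolerance:
            return start + 1
    return None


# Synthetic completion times in seconds for 100 repetitions, modeled as
# exponential decay toward a 60 s floor. Not data from any cited study.
times = [60 + 240 * exp(-rep / 15) for rep in range(1, 101)]

print("plateau begins at repetition", first_plateau(times))
```

The repetition reported depends strongly on the window and tolerance chosen, which is one reason plateau estimates from different studies are hard to compare directly.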

Brinkman et al. also studied the number of repetitions needed for skills acquisition on a robotic simulator [89]. Similar to Kang et al., they looked at repetitions of a single exercise, Ring and Rail II on the da Vinci Skills Simulator. Utilizing a similar cohort composed solely of medical students, they examined the effect of exercise repetition on performance and attempted to find the number of trials needed to reach expert level. Expert performance was determined by having three participants who had performed more than 150 robotic cases complete the exercises. The results support those of Kang et al. in that ten repetitions were not sufficient for the novices to reach an expert level of performance. The study looked at multiple variables, not just time, for task completion, and the authors concluded that certain metrics were better suited to assess novices versus experts. Furthermore, they noted that some metrics such as time could be negated by poor instrument use involving the clashing of arms and excessive tension. In other words, the simulator could provide basic assessment of skills, but this may not necessarily transfer directly to real surgical performance, since a real surgery is multifaceted. Interestingly, not all metrics were seen as applicable to experts.

Kelly et al. also commented on the differing degrees of complexity of the exercises and surmised that certain exercises may be more applicable to experts [81]. This is further supported by Hung et al., who surveyed experts and found them to be in agreement that the simulator was limited in relevance for the expert in robotic surgery [82]. In regard to why certain metrics may not be applicable, Perrenot et al. identified the “camera out of view” metric as something that may not be applicable to experts who are used to having instruments out of camera view but are still aware of their exact location within the abdomen or working field [75].
