Approach to the Highly Sensitized Patient

Joseph M. Nogueira

Eugene J. Schweitzer*

Division of Nephrology, Department of Medicine; and *Division of Transplantation Surgery, Department of Surgery, University of Maryland, Baltimore, Maryland 21201

INTRODUCTION

All recipients of genetically non-identical solid organ transplants are at risk for rejection initiated by alloantigens and must be pharmacologically immunosuppressed to prevent rejection mediated by T-cell recognition of nonself human leukocyte antigen (HLA) molecules as foreign. However, a subset of potential transplant recipients is also at risk for rejection initiated by alloantibodies if they were exposed pretransplant to foreign antigens that are also present on the allograft. When these preexisting alloantibodies are directed against ABO group antigens, HLA class I antigens, endothelial-monocyte antigens, and perhaps HLA class II antigens that are also present on the allograft, they can initiate immediate (hyperacute) or delayed humoral immune responses against the graft (1,2). Patients who possess such alloantibodies prior to transplantation are considered to be “sensitized.” Highly sensitized patients, especially those who have high levels of circulating anti-HLA class I antibodies, face significant difficulties in finding a compatible donor and encounter a worse prognosis for the organ after transplantation (3,4). As such, these patients present difficult challenges to transplant physicians and to organ allocation systems. Until recent years, these patients faced hopelessly long waits for a crossmatch-negative kidney. However, in the past few years much progress has been made in managing highly sensitized patients by better pairing the recipient with a compatible organ and, more recently, by suppressing the powerful humoral immune response elicited by the alloantibodies.

DEFINITION, QUANTITATION, AND MONITORING OF SENSITIZATION

Sensitization is defined as the presence of preformed alloantibodies in the serum of a prospective transplant recipient (3). In other words, it is pretransplant humoral alloimmunization. These alloantibodies are usually anti-HLA class I antibodies but may also include anti-HLA class II or non-HLA antibodies (3). They are formed in response to prior exposure to foreign antigens encountered during events such as blood transfusions, prior transplants, and pregnancies (5). Of note, in addition to this humoral sensitization, there also appears to be donor-reactive T-cell sensitization (“cellular sensitization”), which is measured with a delayed type hypersensitivity assay (6). To what degree this phenomenon may be present pretransplant and manifest posttransplant is not clear.

Anti-HLA antibodies are conventionally known as panel reactive antibodies (PRA), and they are quantified as the percentage of the panel with which the recipient’s serum reacts. Historically, this has been determined by testing the potential recipient’s serum against a panel of lymphocytes harvested from 40 to 60 HLA-typed individuals who were chosen to represent the widest variety of HLA antigens; an antibody-activated, complement-dependent cytotoxic (CDC) assay is used to detect antibodies in the recipient serum against donor lymphocyte surface antigens. The breadth of antibodies present in the potential recipient’s serum is determined by calculating the percent of donors in the panel whose cells are killed. Currently, a variety of assays are used to measure the PRA (7). A discussion of the intricacies of the various methods is beyond the scope of this chapter and is addressed in an earlier chapter. However, to clarify what each test can reveal about a given recipient’s level of sensitization, some salient aspects of the methods that may be used in this setting are discussed below.

The National Institutes of Health (NIH) standard technique, which has been used since the 1960s, essentially involves isolating each donor’s peripheral T cells, placing them in individual wells of a plate, adding recipient serum, incubating, adding rabbit complement to induce killing of cells if complement-fixing antibodies directed against the donor lymphocytes are present in the recipient serum, and then distinguishing live from dead cells using a vital dye. A technologist then identifies which individual donor wells demonstrated cytotoxicity and scores the PRA as the fraction (expressed as percentage) of donors whose cells elicit a positive reaction. The sensitivity of this technique in identifying antidonor antibodies suffers if the antibodies are not efficient enough or are not present in sufficient numbers to activate complement. The antihuman globulin (AHG)-enhanced technique includes the addition of antihuman immunoglobulin (Ig) antibody to augment the ability of the recipient antibodies to activate complement by providing crosslinking molecules, and thereby it increases the sensitivity of detecting these alloantibodies. Both of these cytotoxic assays (especially the more sensitive AHG-enhanced technique) will generally detect not only antibodies directed against HLA but also those directed against other non-HLA antigens that reside on lymphocytes. The latter antigens should not be present on the allograft cells and may therefore be clinically irrelevant. Also, the assays may not differentiate between IgG and IgM antibodies, and this distinction can be important because IgM antibodies are generally considered to be benign in this context as they are generally not directed at HLA antigens and are often drug induced. Dithiothreitol (DTT) destroys IgM and therefore is added to eliminate the effect of IgM in these PRA assays. However, it may also cause a weak IgG antibody response to disappear, thereby diminishing the sensitivity of the assay.
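The scoring step described above is simple arithmetic, and a minimal Python sketch may make it concrete. The function name `percent_pra`, the donor labels, and the example panel are hypothetical illustrations, not part of any laboratory standard; the sketch assumes each well has already been read as positive (cytotoxicity seen) or negative.

```python
def percent_pra(well_reactions):
    """Return the PRA as the percentage of panel donors whose cells
    showed a positive (cytotoxic) reaction with the recipient serum.

    well_reactions maps a donor label to True (positive well) or
    False (negative well). The labels are illustrative only.
    """
    if not well_reactions:
        raise ValueError("panel must contain at least one donor")
    positive = sum(1 for reacted in well_reactions.values() if reacted)
    return 100.0 * positive / len(well_reactions)


# Hypothetical 50-donor panel in which the first 12 wells are positive.
panel = {f"donor_{i:02d}": (i < 12) for i in range(50)}
print(f"PRA = {percent_pra(panel):.0f}%")  # PRA = 24%
```

Because the result depends on which donors make up the panel, the same serum could yield a different percentage against a different panel; the sketch does not attempt to model that.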

The flow cytometry technique uses soluble HLA molecules that are bound to beads, which are used in place of live donor T cells. The HLA molecules are either class I or class II and are, as with the cytotoxic assays, chosen from typed individuals with a wide spectrum of HLA antigens. The beads are incubated with recipient serum and then with antihuman antibodies labeled with a fluorochrome, and finally the antibody-labeled beads are passed through a flow cytometer that measures the intensity of light emitted from the fluorochromes and mathematically converts it into a percent PRA. Still another method uses enzyme-linked immunosorbent assay (ELISA) methodology rather than flow cytometry to detect antibodies. The main advantage of these two molecular HLA (as opposed to live cellular) assays in the field of pretransplant PRA testing is that they specifically detect anti-HLA antibodies and not antibodies against other molecules on the lymphocyte that have no significance in alloreactivity. Other advantages include: (a) these flow cytometry and ELISA techniques are considered to be more sensitive than the complement-dependent cytotoxicity assays, (b) they can distinguish between IgG and IgM antibodies, and (c) they can determine if the antibodies are directed against HLA class I, class II, or both. Moreover, because ELISA reactions can be examined in a multiwell plate, determination of the specificity of HLA antibodies is possible with this technique.

Although various regulatory bodies (e.g., United Network for Organ Sharing [UNOS], Health Care Financing Administration) may dictate which tests to use and how often they should be done, a PRA should generally be measured at least every three months on all patients on the cadaveric waiting list. Because kidney transplant candidates with high PRAs statistically face longer wait list times for compatible organs, they are given priority in the cadaveric renal allograft allocation system for potentially compatible organs. As discussed later, PRA levels are instrumental in guiding cost-efficient and (cold ischemia) time-efficient crossmatching immediately prior to cadaveric renal transplantation, and they greatly affect posttransplant allograft outcome.

Various terms and definitions have been used to grade the degree of sensitization. Although there appears to be a graded effect on outcomes (8), the term “highly sensitized” or “broadly sensitized” is applied to those who have a PRA of at least 30% to 50%. A designation of “unsensitized” has been applied to patients with a PRA of 0% to 10%. Some authors hold that the peak pretransplant PRA is more predictive of graft outcome than the PRA level at the time of surgery (8,9) and that therefore the sensitization status of a given patient may best be determined by the highest pretransplant PRA. Other investigators have argued that only those antibodies present in the serum at the time of transplantation are relevant to outcome (10). The peak PRA may be less pertinent if histocompatibility laboratories have a conscientious sampling and screening program that excludes recipients from receiving allografts that harbor mismatched antigens against which the recipient has ever developed specific antibodies (11).
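The grading terms above can be sketched as a small Python function. Everything named here is an assumption for illustration: the function name `sensitization_grade`, the intermediate label "sensitized" (borrowed from the PRA > 10% usage elsewhere in this chapter), and the default cutoff of 50%, which is only one value within the 30% to 50% range that different authors use. The `use_peak` flag reflects the unresolved question of whether the peak or the current PRA should define a patient's status.

```python
def sensitization_grade(pra_history, high_threshold=50.0, use_peak=True):
    """Grade sensitization from a chronological list of percent PRA values.

    high_threshold is an assumed cutoff for "highly sensitized";
    published cutoffs range from 30% to 50%.
    use_peak=True grades on the highest value ever recorded;
    use_peak=False grades on the most recent value.
    """
    if not pra_history:
        raise ValueError("at least one PRA measurement is required")
    if any(not 0.0 <= value <= 100.0 for value in pra_history):
        raise ValueError("PRA values must lie between 0 and 100")

    pra = max(pra_history) if use_peak else pra_history[-1]
    if pra <= 10.0:
        return "unsensitized"
    if pra >= high_threshold:
        return "highly sensitized"
    return "sensitized"  # illustrative label for the intermediate range


# Hypothetical patient whose PRA peaked at 62% and later fell to 18%.
history = [5.0, 62.0, 18.0]
print(sensitization_grade(history))                  # highly sensitized
print(sensitization_grade(history, use_peak=False))  # sensitized
```

The two calls give different grades for the same patient, which is the practical consequence of the peak versus current PRA debate described above.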

IMPORTANCE OF SENSITIZATION

The Scientific Registry of Transplant Recipients data indicate that 19.5% and 14% of patients on the 2002 kidney transplant wait list have a peak PRA of 10-79% and >80%, respectively (51). Although immediate graft loss due to hyperacute rejection (HAR) historically was a common occurrence in these patients, this problem has been largely eliminated using sensitive crossmatch techniques. Still, sensitized patients wait longer for a compatible allograft (3), are at increased risk for early acute humoral rejection (12, 13, 14, 15, 16), and have worse short-term and long-term outcomes (4).

In contrast to the universal use of PRA in the pretransplant setting, posttransplant humoral alloreactivity (PRA) is rarely measured or used clinically. However, such posttransplant alloantibodies may be clinically important. A recent review by McKenna et al (17) cited more than 23 studies that showed that the presence of anti-HLA antibodies posttransplant (identified with assays using either donor lymphocytes or a panel of HLA antigen targets) is associated with acute and chronic rejection as well as decreased graft survival in various transplanted organs, including kidneys. Renal transplant patients with posttransplant HLA alloantibodies were five to six times more likely to develop chronic rejection (18,19). Animal and in vitro human models suggest that a repair response to donor-specific antibodies may result in arterial thickening associated with chronic rejection (20). To what extent patients with pretransplant sensitization may be at risk for this phenomenon of posttransplant alloantibody-induced graft pathology and to what extent posttransplant humorally mediated rejection involves a de novo versus an anamnestic response is not yet clear.

CAUSES OF SENSITIZATION

There are three primary sources of sensitization of kidney transplant patients: pregnancy, blood transfusions, and prior transplants (5,8). All three of these situations may present the potential recipient’s immune system with “a look at” foreign antigens, including HLA molecules. If the potential recipient is not immunosuppressed, he or she will appropriately produce antibodies against these alloantigens. These sensitizing events appear to have a cumulative and interacting impact on the PRA.

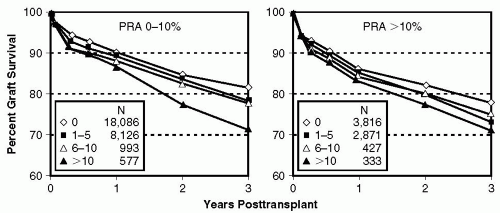

Blood transfusions. Early in the history of solid organ transplantation, the “transfusion effect” was observed by Opelz et al (21) and others (22) when they demonstrated a benefit on graft outcome if preoperative blood transfusions were given in combination with immunosuppressive drugs or x-radiation (23,24). The mechanism of this beneficial effect has still not been elucidated. It has been theorized that (a) it may allow for preselection of a population with a high response (that later will presumably fail crossmatch when reexposed to antigens), (b) it may induce clonal deletion or activate suppressor mechanisms of alloreactive T cells, or (c) it may block allo- or anti-idiotypic antibodies (25). Whatever the case, over the years it has become clear that blood transfusions may also induce sensitization, and by the late 1990s the previously noted beneficial “transfusion effect” had given way to a deleterious effect, with worsening graft survival associated with greater numbers of transfusions in sensitized and nonsensitized patients (26) (Fig. 5.1).

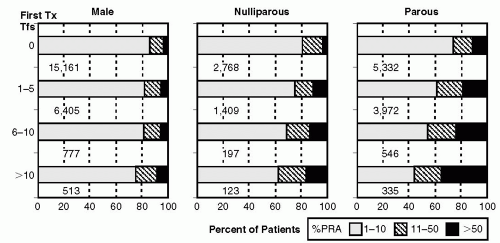

Prior transplants and pregnancies. Visual comparison of Figure 5.2 and Figure 5.3 suggests (albeit without formal statistical analysis) that the rate of sensitization is greater in regraft than in initial graft recipients. The sensitizing effect of pregnancy appears to be more important in initial transplants (Fig. 5.2) than in retransplants (Fig. 5.3). Figure 5.2 demonstrates that increasing numbers of transfusions are associated with increasing PRAs, and the effect seemed to be modulated by sex and pregnancies, as the sensitizing effect was greater in parous females compared with nulliparous females and was greater in nulliparous females than in males. It has been hypothesized that pregnant women may be sensitized at the time of delivery with exposure to paternal HLA antigens expressed by fetal cells (27).

Other as yet unidentified factors. Among patients receiving their first kidney transplant with no known history of blood transfusions, approximately 20% of nulliparous women and 13% of men were sensitized (PRA > 10%) (26). It is not clear how such a large proportion of patients lacking risk factors became sensitized. As this observation was noted in patients who actually received a kidney and as the prevalence of sensitization is overall higher in wait-listed patients, it is likely that a higher percent of such patients on the transplant wait list may be sensitized. Certainly, underreporting of these sensitizing events may have occurred.

Additional factors in women may have included unrecognized pregnancies, alloantigenic stimulation from sperm, or an augmenting estrogen effect (28).

PATHOGENESIS OF SENSITIZATION

Essentially, sensitization develops when a nonimmunosuppressed patient is exposed to foreign human cells that have HLA molecules or other surface antigens that are recognized as nonself. A humoral response ensues, and it appears to be initiated via the T-cell dependent, Th2 cytokine-driven humoral response (as opposed to T-cell independent B-cell activation). However, because B cells and plasma cells have short life spans, it is not clear how a patient may sustain an anti-HLA antibody response and thus a high PRA without ongoing antigenic stimulation. Possible explanations include (a) the persistence of residual donor protein antigens in long-lived follicular dendritic cells, (b) the presence of cross-reactive environmental antigens, or (c) the development of chimerism (the presence of donor stem cells in the host) or microchimerism (small numbers of such cells) (29). In support of the theory of a chimeric mechanism, one group has identified nongenomic DNA in sensitized patients (Y chromosome material in sensitized females) (29), and another group demonstrated more than two HLA-DR antigens in a higher percentage of sensitized as compared with nonsensitized individuals (30).
